Having practised TDD/BDD and written automated tests for several years, I've tried to identify ways to get the most out of them. It seems to come down to deciding what to test, and how much.

What to test?

Tests are immensely useful for upholding software quality, and they help us deliver high-quality software to our clients on time. However, 100% code coverage is not a requirement for applications and should not be pursued as a goal (libraries are a different matter entirely). Not everything needs to be unit tested.

The most important thing to test is business logic. Is the user's order persisted correctly? Does this calculation work as intended for all possible inputs? Business logic is usually easy to identify and can readily be isolated from other code, which makes it easy to test. Another important thing to test is error handling. Are exceptions raised when they should be? Does the code fail as expected?
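
To make this concrete, here is a minimal Python sketch of testing a piece of business logic together with its error handling. The function `calculate_order_total` and its rules (a discount between 0 and 1, no negative quantities) are hypothetical and invented for illustration. The tests are plain pytest-style functions that use only `assert`, so pytest can collect them, or they can simply be called directly.

```python
from decimal import Decimal


def calculate_order_total(items, discount_rate=Decimal("0")):
    """Hypothetical business rule, for illustration only.

    items: iterable of (unit_price, quantity) pairs, prices as Decimal.
    discount_rate: Decimal in the range [0, 1].
    Returns the discounted total rounded to cents.
    """
    if not Decimal("0") <= discount_rate <= Decimal("1"):
        raise ValueError("discount_rate must be between 0 and 1")
    subtotal = Decimal("0")
    for unit_price, quantity in items:
        if quantity < 0:
            raise ValueError("quantity cannot be negative")
        subtotal += unit_price * quantity
    return (subtotal * (1 - discount_rate)).quantize(Decimal("0.01"))


# Business logic: does the calculation give the right answer for typical inputs?
def test_total_without_discount():
    items = [(Decimal("9.99"), 2), (Decimal("5.00"), 1)]
    assert calculate_order_total(items) == Decimal("24.98")


def test_total_with_discount():
    items = [(Decimal("100.00"), 1)]
    assert calculate_order_total(items, Decimal("0.15")) == Decimal("85.00")


def test_empty_order_totals_zero():
    assert calculate_order_total([]) == Decimal("0.00")


# Error handling: is an exception raised when it should be?
def test_negative_quantity_is_rejected():
    try:
        calculate_order_total([(Decimal("1.00"), -1)])
    except ValueError:
        return
    raise AssertionError("negative quantity should raise ValueError")


def test_invalid_discount_is_rejected():
    try:
        calculate_order_total([(Decimal("1.00"), 1)], Decimal("1.5"))
    except ValueError:
        return
    raise AssertionError("discount above 1 should raise ValueError")
```

None of these tests look inside the function; they only check results and raised exceptions.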

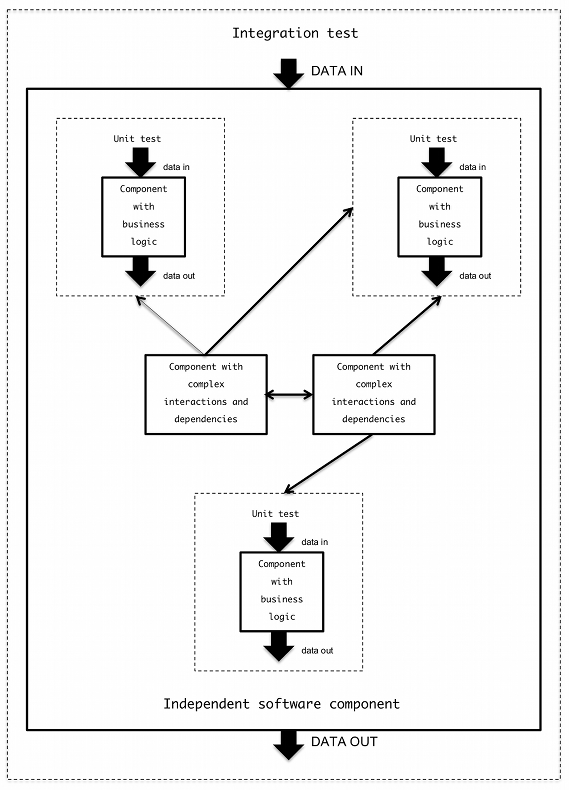

A developer should be able to count on libraries and third-party software working as intended. Unit testing is generally not worth the effort if the unit depends so heavily on other units that mocking them requires a huge amount of bootstrap code. In my experience, the most efficient tests are integration tests, because they cover a broad slice of the software stack with relatively little test code. Integration tests usually achieve the same coverage as a set of individual unit tests with considerably less effort.

Integration testing does not necessarily mean starting from the topmost layer and testing the whole stack down to the bottom. It can also target code deeper in the stack: we feed in an input and observe only what comes out (e.g. what is saved to the database).

Picture 1. Mix unit and integration tests to get adequate coverage while keeping the tests easy to maintain.
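
As a sketch of such a deeper-in-the-stack integration test, the example below calls a service-layer function and then checks only what ended up in the database. It uses Python's built-in `sqlite3` module with an in-memory database as a stand-in for a real one; the `orders` table, `create_schema` and `place_order` are hypothetical names for illustration. Amounts are stored as integer cents to keep the example simple.

```python
import sqlite3


def create_schema(conn):
    # Hypothetical schema, for illustration only.
    conn.execute(
        "CREATE TABLE orders ("
        " id INTEGER PRIMARY KEY,"
        " customer TEXT NOT NULL,"
        " total_cents INTEGER NOT NULL)"
    )


def place_order(conn, customer, items):
    """Hypothetical service-layer function: validate, compute the total, persist.

    items: iterable of (price_cents, quantity) pairs.
    Returns the id of the new order row.
    """
    if not customer:
        raise ValueError("customer is required")
    total_cents = sum(price_cents * quantity for price_cents, quantity in items)
    with conn:  # commits on success, rolls back if an exception escapes
        cursor = conn.execute(
            "INSERT INTO orders (customer, total_cents) VALUES (?, ?)",
            (customer, total_cents),
        )
    return cursor.lastrowid


def new_test_db():
    # A fresh in-memory database per test keeps the tests independent.
    conn = sqlite3.connect(":memory:")
    create_schema(conn)
    return conn


def test_place_order_persists_total():
    conn = new_test_db()
    order_id = place_order(conn, "alice", [(999, 2), (500, 1)])
    # Observe only what came out: the row that was saved.
    row = conn.execute(
        "SELECT customer, total_cents FROM orders WHERE id = ?", (order_id,)
    ).fetchone()
    assert row == ("alice", 2498)
    conn.close()


def test_order_without_customer_is_not_saved():
    conn = new_test_db()
    try:
        place_order(conn, "", [(100, 1)])
    except ValueError:
        pass
    else:
        raise AssertionError("missing customer should raise ValueError")
    # The error path must not leave a half-written order behind.
    assert conn.execute("SELECT COUNT(*) FROM orders").fetchone() == (0,)
    conn.close()
```

Because the tests only look at the persisted rows, `place_order` can be restructured internally without touching them.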

Most important things to test

- Business logic: Usually this concerns processing the information and making sure that the program does the right thing with the data.

- Error handling: Are the exceptions raised when they should be? Does this logic fail as expected?

Not so important things to test

- Framework/library functions: Does this framework/library do what it is supposed to do? Does the router route correctly? Do the validators validate correctly? Do getters and setters get and set?

- Unit tests that require mocking the complex dependencies of a software layer.

Unit testing highly dependent code is usually not worth the effort. First, such tests are difficult to set up, because every dependency has to be mocked. Second, they are very fragile when the code is refactored. Ideally, tests help with refactoring by checking that the same input data still produces the same output. Unit tests on code with complex dependencies break easily, and a refactoring can easily take twice as long because the tests have to be fixed as well.

It's the data, stupid

So, to summarize: concentrate on testing the data. Business logic is about aggregating, processing and storing data correctly, and this is the key part of the application. Write tests that treat complex code as a black box: put data in and see what comes out. Ignore the internal processes, but make sure the code handles error situations correctly.
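
A data-driven (table-driven) test is one way to put this black-box approach into practice: each case is just input data paired with the expected output, and nothing in the test depends on how the function computes its result. The `aggregate_sales` function below is a hypothetical example of aggregation logic, written for illustration only.

```python
from collections import defaultdict


def aggregate_sales(rows):
    """Hypothetical aggregation logic: total quantity sold per product.

    rows: iterable of (product, quantity) tuples.
    Raises ValueError on a non-positive quantity.
    """
    totals = defaultdict(int)
    for product, quantity in rows:
        if quantity <= 0:
            raise ValueError(f"invalid quantity for {product!r}: {quantity}")
        totals[product] += quantity
    return dict(totals)


# Each case is just data: the input rows and the expected output.
CASES = [
    ([], {}),
    ([("apple", 3)], {"apple": 3}),
    ([("apple", 3), ("pear", 1), ("apple", 2)], {"apple": 5, "pear": 1}),
]

# Inputs that must be rejected with an error.
INVALID_INPUTS = [
    [("apple", 0)],
    [("apple", 2), ("pear", -1)],
]


def test_aggregate_sales_outputs():
    for rows, expected in CASES:
        assert aggregate_sales(rows) == expected, rows


def test_aggregate_sales_rejects_bad_quantities():
    for rows in INVALID_INPUTS:
        try:
            aggregate_sales(rows)
        except ValueError:
            continue
        raise AssertionError(f"expected ValueError for {rows}")
```

Adding a case means adding a line of data, and replacing `defaultdict` with, say, `collections.Counter` inside the function would not require changing the tests.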

The point is to get maximum utility out of as few tests as possible. Fewer tests mean less code to maintain. And in a big project, lean test suites that don't take ages to run are very desirable.